There are at least two ways to interpret the title of this post. One interpretation implies that the post is about the effects of cognitive biases in the workplace, and maybe how to manage those effects. A rewording of the title, consistent with this interpretation, is "Cognitive Biases in the Workplace." A second interpretation is that the post is about noticing when cognitive biases are playing a role in a given situation, and maybe what to do about that or how to prevent it. A rewording of the title, consistent with this second interpretation, might be "When Cognitive Biases Are Playing a Role."

When you saw the title and read it for the first time, the interpretation that came to mind first was determined, in part, by cognitive biases. Cognitive biases are powerful. They determine, in part, how we make decisions, how we interact with one another, and how well those decisions and interactions serve us in the workplace, or anywhere else for that matter.

But the focus of this post is cognitive biases in the workplace.

Let's begin by clearing away some baggage related to the term cognitive bias. The word cognitive isn't (or until recently wasn't) a common element of workplace vocabulary. In the context of this discussion, it just means "of or related to thinking." The real problem with the term cognitive bias is the word bias, which has some very negative connotations. In lay language, bias relates to prejudice and unfairness. That isn't the sense we need here. In this context, the bias in question is a systematic skew of our thinking away from evidence-based reasoning.

And that's where the problems arise. At work, we tend to think of ourselves as making decisions and analyzing problems using only the tools of evidence-based reasoning. Although that is what we believe, science tells us another story. When we think and when we make decisions, we use a number of patterns of thinking that transcend — and sometimes exclude — evidence-based reasoning.

Some view these alternate patterns of thinking as "less than," "subordinate to," or "of lesser value than" evidence-based reasoning. In this view, decisions or analyses performed on the basis of anything other than evidence-based reasoning are questionable and not to be relied upon. By that standard, unless we can offer an evidence-based chain of reasoning to justify a decision or analysis, that decision or analysis is nearly worthless.

I disagree. But first let me offer support for the critics of alternate patterns of thinking.

An example of a cognitive bias in real life

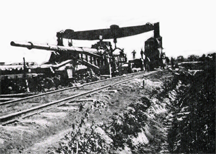

A so-called "Paris Gun" of World War I. Photo courtesy Wikipedia.

The decision by Germany in World War I to target cities instead of logistics assets could have been influenced by the cognitive bias known as the Availability Heuristic. Because it's much easier to imagine the destruction of parts of a city than it is to imagine the widely dispersed and unspectacular consequences of disabling a port or railhead, targeting Paris instead of Dover or Calais might have seemed more advantageous to the German cause than it actually was, or ever could have been. The Availability Heuristic may have led German strategists astray.

How we benefit from cognitive biases

If cognitive biases lead us to such disadvantageous conclusions, why then do we have cognitive biases? What good are they?

Cognitive biases are not something we have. What we have are patterns of thinking that result in cognitive biases. We have ways of making decisions and analyzing situations that are far more economical and much faster than evidence-based reasoning. And much of the time, the results we achieve with these alternate patterns of thinking are close to what we could have achieved with evidence-based reasoning. In many situations those results are close enough.

The patterns of thinking that exhibit cognitive biases aren't defects. They aren't shortcomings in the design of humans that need to be rooted out and destroyed. On the contrary, they're actually wonderful tools — alternatives to evidence-based reasoning — that get us "pretty fair" results quickly and cheaply much of the time. The defect, if there is one, is our habit of relying on one or more of these alternate patterns of thinking when their results aren't close enough to what we could achieve if we had the time and resources to apply evidence-based reasoning. Or the defect is our habit of relying on them when their results aren't close enough often enough.

But there are a great many cognitive biases. Wikipedia lists 196 of them as of this writing. Now it's unlikely that every single one of these identified cognitive biases arises from a single unique alternate pattern of thinking that produces that bias and only that bias. My own guess is that there are many fewer of these alternate patterns of thinking (often called heuristics), and that they exhibit different cognitive biases in different situations.

Even so, we can't possibly manage the risks associated with these alternate patterns of thinking by considering all of the known cognitive biases all the time. We need a way of focusing our risk management efforts to address only the cognitive biases that are most likely to affect particular kinds of decisions or analyses, depending on what we're doing at the moment. In short, we need a heuristic to help us manage the risks of using heuristics. And we'll make a start on that project next time.


Are your projects always (or almost always) late and over budget? Are your project teams plagued by turnover, burnout, and high defect rates? Turn your culture around. Read 52 Tips for Leaders of Project-Oriented Organizations, filled with tips and techniques for organizational leaders. Order Now!


Your comments are welcome

Would you like to see your comments posted here? Send me your comments by email, or by Web form.

About Point Lookout

Thank you for reading this article. I hope you enjoyed it and found it useful, and that you'll consider recommending it to a friend.


This article in its entirety was written by a human being. No machine intelligence was involved in any way.

Point Lookout is a free weekly email newsletter. Browse the archive of past issues. Subscribe for free.

Support Point Lookout by joining the Friends of Point Lookout, as an individual or as an organization.

Do you face a complex interpersonal situation? Send it in, anonymously if you like, and I'll give you my two cents.

Related articles

More articles on Cognitive Biases at Work:

Effects of Shared Information Bias: II

Shared information bias is widely recognized as a cause of bad decisions. But over time, it can also erode a group's ability to assess reality accurately. That can lead to a widening gap between reality and the group's perceptions of reality.

Perfectionism and Avoidance

Avoiding tasks we regard as unpleasant, boring, or intimidating is a pattern known as procrastination. Perfectionism is another pattern. The interplay between the two makes intervention a bit tricky.

Seven Planning Pitfalls: III

We usually attribute departures from plan to poor execution, or to "poor planning." But one cause of plan ineffectiveness is the way we think when we set about devising plans. Three cognitive biases that can play roles are the so-called Magical Number 7, the Ambiguity Effect, and the Planning Fallacy.

Mental Accounting and Technical Debt

In many organizations, technical debt has resisted efforts to control it. We've made important technical advances, but full control might require applying some results of the behavioral economics community, including a concept they call mental accounting.

Lessons Not Learned: II

The planning fallacy is a cognitive bias that causes us to underestimate the cost and effort involved in projects large and small. Efforts to limit its effects are more effective when they're guided by interactions with other cognitive biases.

See also Cognitive Biases at Work for more related articles.

Forthcoming issues of Point Lookout

Coming October 1: On the Risks of Obscuring Ignorance

When people hide their ignorance of concepts fundamental to understanding the issues at hand, they expose their teams and organizations to a risk of making wrong decisions. The organizational costs of the consequences of those decisions can be unlimited. Available here and by RSS on October 1.

And on October 8: Responding to Workplace Bullying

Effective responses to bullying sometimes include "pushback tactics" that can deter perpetrators from further bullying. Because perpetrators use some of these same tactics, some people have difficulty employing them. But the need is real. Pushing back works. Available here and by RSS on October 8.

Coaching services

I offer email and telephone coaching at both corporate and individual rates. Contact Rick for details at (650) 787-6475, or toll-free in the continental US at (866) 378-5470.

Get the ebook!

Past issues of Point Lookout are available in six ebooks:

- Get 2001-2 in Geese Don't Land on Twigs (PDF)

- Get 2003-4 in Why Dogs Wag (PDF)

- Get 2005-6 in Loopy Things We Do (PDF)

- Get 2007-8 in Things We Believe That Maybe Aren't So True (PDF)

- Get 2009-10 in The Questions Not Asked (PDF)

- Get all of the first twelve years (2001-2012) in The Collected Issues of Point Lookout (PDF)

Are you a writer, editor or publisher on deadline? Are you looking for an article that will get people talking and get compliments flying your way? You can have 500-1000 words in your inbox in one hour. License any article from this Web site.

Follow Rick



A Tip A Day feed


Point Lookout weekly feed

My blog, Technical Debt for Policymakers, offers resources, insights, and conversations of interest to policymakers who are concerned with managing technical debt within their organizations. Get the millstone of technical debt off the neck of your organization!
