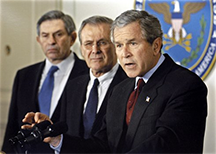

Left to right: Deputy Secretary of Defense Paul Wolfowitz, Defense Secretary Donald H. Rumsfeld, and President George W. Bush conduct a press conference on September 17, 2001, at the Pentagon, after a meeting to continue planning an appropriate military response to the al Qaeda threat. Certainly, over the months and years that followed, this team and many other members of the administration underestimated the scale of the problems they faced. Mr. Wolfowitz, in particular, expressed views far more optimistic than, and contrary to, the evidence-based views of many experts. One of his most often quoted comments about the cost of the Iraq War is that in the case of Iraq, the United States is dealing with "a country that can really finance its own reconstruction and relatively soon." Defense Department photo by R. D. Ward.

Overconfidence is the state of having too much confidence: confidence beyond levels justified by evidence. One trouble with that definition is that it provides little useful insight: how much is too much? A second trouble is that it exemplifies itself, in that it presumes that levels of confidence can be assigned with, um, confidence. Often we can do no such thing. Situations affected by overconfidence include hiring, making strategic choices, chartering projects, and cancelling projects; indeed, they include most workplace decision making. Despite the vagueness of the concept of overconfidence, we can draw useful conclusions if we examine the concept more closely.

That's what Don Moore and Paul Healy did in a 2008 paper, cited in over 800 other works (according to Google Scholar), which is a goodly number for such a short time. [Moore & Healy 2008] The authors note that conflicting results in overconfidence research can be resolved when one realizes that the term overconfidence has been used to denote three different classes of judgment errors. They are:

- Overestimation: assessing as too high one's actual ability, performance, level of control, or chance of success.

- Overplacement: the belief that one is better than others, such as when a majority of people rate themselves "better than average."

- Overprecision: excessive certainty regarding the accuracy of one's beliefs.

These tendencies are not character flaws. Rather, they arise from the state of being human, not in the sense of "to err is human," but as a direct consequence of human psychology.

What is surprising is how little we do in organizations to protect ourselves and the organization from the effects of overconfidence. Indeed, some of our behaviors and policies actually induce overconfidence. Here are three examples.

- Unrealistic assessments of the capabilities of others

- A phenomenon known as the Dunning-Kruger Effect, in which people of limited competence tend to overestimate their own competence, can make confidence a misleading indicator of capability. [Kruger & Dunning 1999] Yet we tend to confuse competence and confidence. That is, we assess people as more capable when they project confidence, and inversely, less capable when they project uncertainty. This can lead to decision-making errors in hiring, and in evaluating the advice we receive from subordinates, consultants, experts, and the media.

- Unrealistic standards of precision

- When we evaluate projected performance for projects or business units, we require alignment between projections and actuals. The standards we apply when we assess performance typically exceed by far any reasonable expectations of the precision of those projections. This behavior encourages those making projections to commit the overprecision error.

- Unrealistic risk appetite

- Assessments of success in the context of risk, and our ability to mitigate risk, are subject to overestimation errors. By overestimating our chances of success, and our ability to deal with adversity, we repeatedly subject ourselves to higher levels of risk than we realize.

Cognitive biases that contribute to overconfidence in its various forms include, among others, the planning fallacy, optimism bias, illusory superiority, and, of course, the overconfidence effect. Most important, the bias blind spot causes us to be overconfident about the question of whether we ourselves are ever overconfident. We surely are. At least, I think so.

Is every other day a tense, anxious, angry misery as you watch people around you, who couldn't even think their way through a game of Jacks, win at workplace politics and steal the credit and glory for just about everyone's best work including yours? Read 303 Secrets of Workplace Politics, filled with tips and techniques for succeeding in workplace politics.

For an extensive investigation of the role of overconfidence in governmental policies that lead to war, see Dominic D. P. Johnson, Overconfidence and War: The Havoc and Glory of Positive Illusions, Cambridge, Massachusetts: Harvard University Press, 2004. Order from Amazon.com.

More about the Dunning-Kruger Effect

The Paradox of Confidence [January 7, 2009] - Most of us interpret a confident manner as evidence of competence, and a hesitant manner as evidence of lesser ability. Recent research suggests that confidence and competence are inversely correlated. If so, our assessments of credibility and competence are thrown into question.

How to Reject Expert Opinion: II [January 4, 2012] - When groups of decision makers confront complex problems, and they receive opinions from recognized experts, those opinions sometimes conflict with the group's own preferences. What tactics do groups use to reject the opinions of people with relevant expertise?

Devious Political Tactics: More from the Field Manual [August 29, 2012] - Careful observation of workplace politics reveals an assortment of devious tactics that the ruthless use to gain advantage. Here are some of their techniques, with suggestions for effective responses.

Wishful Thinking and Perception: II [November 4, 2015] - Continuing our exploration of causes of wishful thinking and what we can do about it, here's Part II of a little catalog of ways our preferences and wishes affect our perceptions.

Wishful Significance: II [December 23, 2015] - When we're beset by seemingly unresolvable problems, we sometimes conclude that "wishful thinking" was the cause. Wishful thinking can result from errors in assessing the significance of our observations. Here's a second group of causes of erroneous assessment of significance.

Cognitive Biases and Influence: I [July 6, 2016] - The techniques of influence include inadvertent (and not-so-inadvertent) uses of cognitive biases. They are one way we lead each other to accept or decide things that rationality cannot support.

The Paradox of Carefully Chosen Words [November 16, 2016] - When we take special care in choosing our words, so as to avoid creating misimpressions, something strange often happens: we create a misimpression of ignorance or deceitfulness. Why does this happen?

Risk Acceptance: One Path [March 3, 2021] - When a project team decides to accept a risk, and when their project eventually experiences that risk, a natural question arises: What were they thinking? Cognitive biases, other psychological phenomena, and organizational dysfunction all can play roles.

Cassandra at Work [April 13, 2022] - When a team makes a wrong choice, and only a tiny minority advocated for what turned out to have been the right choice, trouble can arise when the error at last becomes evident. Maintaining team cohesion can be a difficult challenge for team leaders.

Embedded Technology Groups and the Dunning-Kruger Effect [March 12, 2025] - Groups of technical specialists in fields that differ markedly from the main business of the enterprise that hosts them must sometimes deal with wrong-headed decisions made by people who think they know more about the technology than they actually do.


Your comments are welcome

Would you like to see your comments posted here? Send me your comments by email, or by Web form.

About Point Lookout

Thank you for reading this article. I hope you enjoyed it and found it useful, and that you'll consider recommending it to a friend.

This article in its entirety was written by a human being. No machine intelligence was involved in any way.

Point Lookout is a free weekly email newsletter. Browse the archive of past issues. Subscribe for free.

Support Point Lookout by joining the Friends of Point Lookout, as an individual or as an organization.

Do you face a complex interpersonal situation? Send it in, anonymously if you like, and I'll give you my two cents.

Related articles

More articles on Personal, Team, and Organizational Effectiveness:

How We Avoid Making Decisions - When an important item remains on our To-Do list for a long time, it's possible that we've found ways to avoid facing it. Some of the ways we do this are so clever that we may be unaware of them. Here's a collection of techniques we use to avoid engaging difficult problems.

Poverty of Choice by Choice - Sometimes our own desire not to have choices prevents us from finding creative solutions. Life can be simpler (if less rich) when we have no choices to make. Why do we accept the same tired solutions, and how can we tell when we're doing it?

Virtual Communications: II - Participating in or managing a virtual team presents special communications challenges. Here's Part II of some guidelines for communicating with members of virtual teams.

How to Deal with Holding Back - When group members voluntarily restrict their contributions to group efforts, group success is threatened and high performance becomes impossible. How can we reduce the incidence of holding back?

Time Slot Recycling: The Risks - When we can't begin a meeting because some people haven't arrived, we sometimes cancel the meeting and hold a different one, with the people who are in attendance. It might seem like a good way to avoid wasting time, but there are risks.

See also Personal, Team, and Organizational Effectiveness for more related articles.

Forthcoming issues of Point Lookout

Coming October 1: On the Risks of Obscuring Ignorance - A common dilemma in knowledge-based organizations: ask for an explanation, or "fake it" until you can somehow figure it out. The choice between admitting your own ignorance or obscuring it can be a difficult one. It has consequences for both the choice-maker and the organization. Available here and by RSS on October 1.

And on October 8: Responding to Workplace Bullying - Effective responses to bullying sometimes include "pushback tactics" that can deter perpetrators from further bullying. Because perpetrators use some of these same tactics, some people have difficulty employing them. But the need is real. Pushing back works. Available here and by RSS on October 8.

Coaching services

I offer email and telephone coaching at both corporate and individual rates. Contact Rick for details by email, or at (650) 787-6475, or toll-free in the continental US at (866) 378-5470.

Get the ebook!

Past issues of Point Lookout are available in six ebooks:

- Get 2001-2 in Geese Don't Land on Twigs (PDF)

- Get 2003-4 in Why Dogs Wag (PDF)

- Get 2005-6 in Loopy Things We Do (PDF)

- Get 2007-8 in Things We Believe That Maybe Aren't So True (PDF)

- Get 2009-10 in The Questions Not Asked (PDF)

- Get all of the first twelve years (2001-2012) in The Collected Issues of Point Lookout (PDF)

Are you a writer, editor or publisher on deadline? Are you looking for an article that will get people talking and get compliments flying your way? You can have 500-1000 words in your inbox in one hour. License any article from this Web site.

Follow Rick

A Tip A Day feed

Point Lookout weekly feed

My blog, Technical Debt for Policymakers, offers resources, insights, and conversations of interest to policymakers who are concerned with managing technical debt within their organizations. Get the millstone of technical debt off the neck of your organization!
